-

라즈베리파이를 이용한 자율주행 로봇 연동 제어 (Line Tracer)프로젝트/자율주행 자동차 제작 프로젝트 2020. 9. 2. 17:54

💡Line Tracer란 무엇일까요?

단어 그대로입니다! 정해진 선(Line)을 따라가는(Tracer) 것으로, line following이라고도 불립니다. 주로 산업용 AGV(Automatic Guided Vehicle)에 사용된다고 하네요. 주행선을 센서로 검출하여 목적지까지 선을 따라 이동하는 원리입니다.

이 포스팅에서는 카메라를 통해 영상을 인식하고, Line Tracer를 활용하여 라인을 추출하여 자율주행 로봇을 제어해보는 실습을 진행해보겠습니다.

이동 간에 목적지 위치를 지속적으로 수신하기 위해서 GPS 센서를, 장애물들을 회피하기 위하여 이전 포스팅에서 사용했던 초음파 센서를 접목시키면 주행의 정확도와 안전성을 향상시킬 수 있겠죠🤩

Line Tracer의 원리를 적용하여, 이미지에서 주행선을 인식해볼까요?

1. 이미지에서 line 추출하기

2. 동영상에서 line 추출하기 (input video, real-time video)먼저 간단하게 이미지에서 line을 추출해내고, 주행 시 인식해야 할 영역을 지정해줍니다.

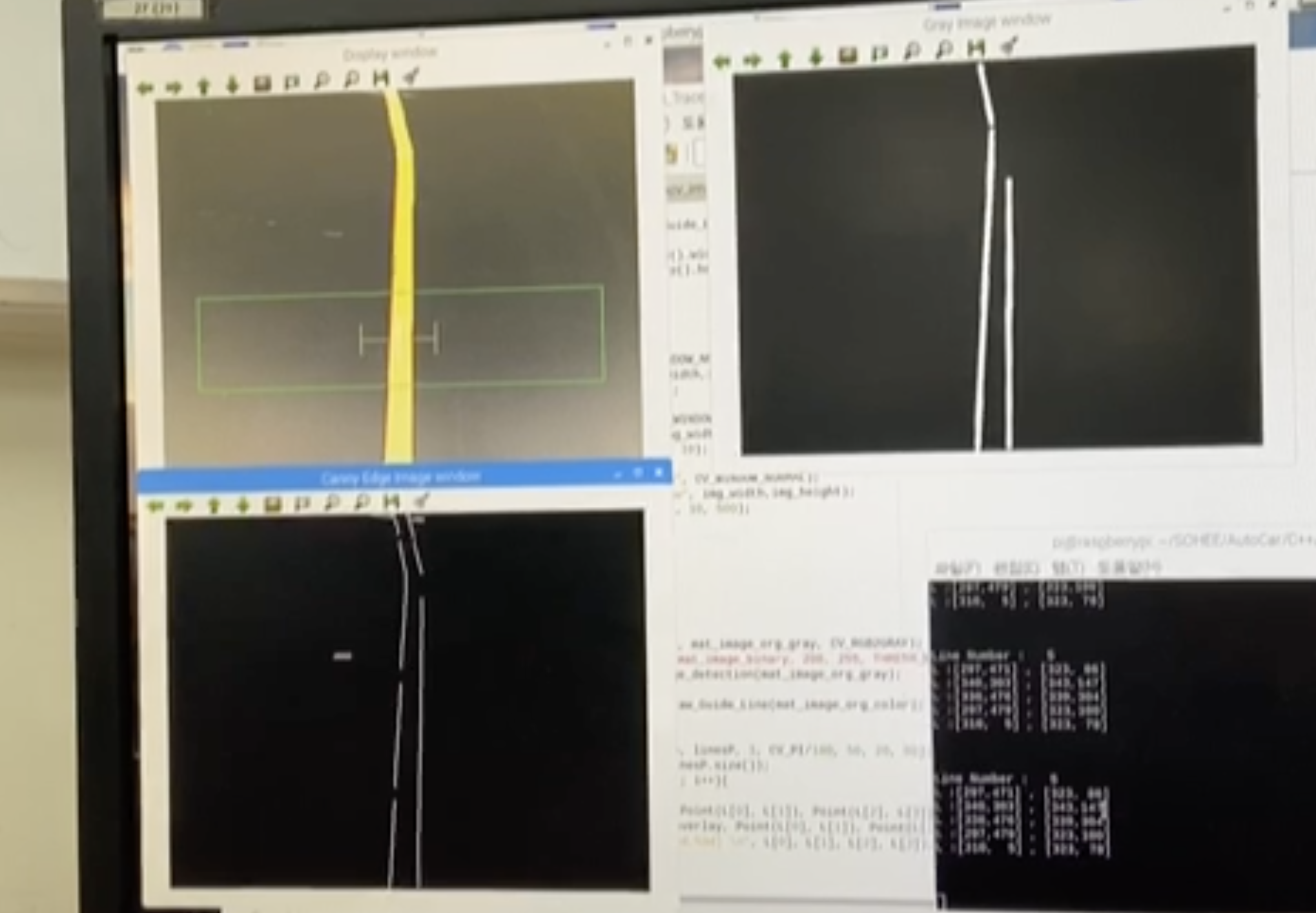

line들과 영역 추출하기 #include <stdio.h> #include <signal.h> #include <stdlib.h> #include <string.h> #include <wiringPi.h> #include <wiringSerial.h> #include <termio.h> #include <softPwm.h> #include <opencv2/opencv.hpp> #include <iostream> #include <opencv2/opencv.hpp> //image processing using namespace cv; using namespace std; #define IMG_Width 640 #define IMG_Height 480 #define ASSIST_BASE_LINE 320 #define ASSIST_BASE_WIDTH 60 int guide_width1=50; int guide_height1=20; int guide_center = (IMG_Width/2); //GPIO Motor Control #define ENA 1 #define IN1 4 #define IN2 5 #define ENB 0 #define IN3 2 #define IN4 3 #define MAX_PWM_DUTY 100 void sig_Handler(int sig); int GPIO_control_setup(void){ if(wiringPiSetup() == -1){ printf("WiringPi Setup error!\n"); return -1; } pinMode(ENA,OUTPUT); pinMode(IN1,OUTPUT); pinMode(IN2,OUTPUT); pinMode(ENB,OUTPUT); pinMode(IN3,OUTPUT); pinMode(IN4,OUTPUT); softPwmCreate(ENA,1,MAX_PWM_DUTY); softPwmCreate(ENB,1,MAX_PWM_DUTY); softPwmWrite(ENA,0); softPwmWrite(ENB,0); return 0; } void motor_control_r(int pwm){ if(pwm>0){ digitalWrite(IN1,HIGH); digitalWrite(IN2,LOW); softPwmWrite(ENA,pwm); } else if(pwm==0){ digitalWrite(IN1,LOW); digitalWrite(IN2,LOW); softPwmWrite(ENA,0); } else{ digitalWrite(IN1,LOW); digitalWrite(IN2,HIGH); softPwmWrite(ENA,-pwm); } } void motor_control_l(int pwm){ if(pwm>0){ digitalWrite(IN3,LOW); digitalWrite(IN4,HIGH); softPwmWrite(ENB,pwm); } else if(pwm==0){ digitalWrite(IN3,LOW); digitalWrite(IN4,LOW); softPwmWrite(ENB,0); } else{ digitalWrite(IN3,HIGH); digitalWrite(IN4,LOW); softPwmWrite(ENB,-pwm); } } Mat Canny_Edge_detection(Mat img){ Mat mat_blur_img, mat_canny_img; blur(img, mat_blur_img, Size(3, 3)); Canny(mat_blur_img, mat_canny_img, 70, 170, 3); return mat_canny_img; } //화면에 line을 추출할 영역을 그리기 Mat Draw_Guide_Line(Mat img){ Mat result_img; img.copyTo(result_img); rectangle(result_img,Point(50,ASSIST_BASE_LINE-ASSIST_BASE_WIDTH), Point(IMG_Width-50,ASSIST_BASE_LINE+ASSIST_BASE_WIDTH),Scalar(0,255,0),1,LINE_AA); line(result_img,Point(guide_center-guide_width1,ASSIST_BASE_LINE), Point(guide_center,ASSIST_BASE_LINE),Scalar(0,255,255),1,0); line(result_img,Point(guide_center,ASSIST_BASE_LINE), Point(guide_center+guide_width1,ASSIST_BASE_LINE),Scalar(0,255,255),1,0); line(result_img,Point(guide_center-guide_width1,ASSIST_BASE_LINE-guide_height1), Point(guide_center-guide_width1,ASSIST_BASE_LINE+guide_height1),Scalar(0,255,255),1,0); line(result_img,Point(guide_center+guide_width1,ASSIST_BASE_LINE-guide_height1), Point(guide_center+guide_width1,ASSIST_BASE_LINE+guide_height1),Scalar(0,255,255),1,0); line(result_img,Point(IMG_Width/2, ASSIST_BASE_LINE-guide_height1*1.5),Point(IMG_Width/2,ASSIST_BASE_LINE+guide_height1*1.5),Scalar(255,255,255),2,0); return result_img; } int main(void){ int fd; int pwm_r=0; int pwm_l=0; unsigned char test, receive_char; int img_width=640; int img_height=480; Mat mat_image_org_color; Mat mat_image_org_color_overlay; Mat mat_image_org_gray; Mat mat_image_gray_result; Mat mat_image_canny_edge; //line을 그릴 이미지 Mat mat_image_line_image = Mat(IMG_Height, IMG_Width, CV_8UC1, Scalar(0)); Mat image; Scalar GREEN(0,255,0); Scalar RED(0,0,255); Scalar BLUE(255,0,0); Scalar YELLOW(0,255,255); mat_image_org_color = imread("/home/pi/SOHEE/AutoCar/C++/Line_Tracer/1/images/line_1_0.jpg"); mat_image_org_color.copyTo(mat_image_org_color_overlay); mat_image_org_color_overlay = Draw_Guide_Line(mat_image_org_color); img_width = mat_image_org_color.size().width ; img_height = mat_image_org_color.size().height; if(mat_image_org_color.empty()){ cerr << "빈 영상입니다.\n"; return -1; } namedWindow("Display window", CV_WINDOW_NORMAL); resizeWindow("Display window", img_width,img_height); moveWindow("Display window", 10, 10); namedWindow("Gray Image window", CV_WINDOW_NORMAL); resizeWindow("Gray Image window", img_width,img_height); moveWindow("Gray Image window", 700, 10); namedWindow("Canny Edge Image window", CV_WINDOW_NORMAL); resizeWindow("Canny Edge Image window", img_width,img_height); moveWindow("Canny Edge Image window", 10, 500); if (GPIO_control_setup()==-1){ return -1; } //signal(SIGINT,sig_Handler); while(1){ cv::cvtColor(mat_image_org_color, mat_image_org_gray, CV_RGB2GRAY); // color to gray conversion mat_image_canny_edge = Canny_Edge_detection(mat_image_org_gray); mat_image_org_color_overlay = Draw_Guide_Line(mat_image_org_color); vector<Vec4i> linesP; HoughLinesP(mat_image_canny_edge, linesP, 1, CV_PI/180, 50, 20, 50); printf("Line Number : %3d\n", linesP.size()); for(int i = 0; i < linesP.size(); i++){ Vec4i L = linesP[i]; line(mat_image_line_image,Point(L[0], L[1]), Point(L[2], L[3]), Scalar(255), 3, LINE_AA); line(mat_image_org_color_overlay, Point(L[0], L[1]), Point(L[2], L[3]), Scalar(0, 0, 255), 1, LINE_AA); printf("L :[%3d,%3d] , [%3d,%3d] \n", L[0], L[1], L[2], L[3]); } printf("\n\n\n"); imshow("Display window", mat_image_org_color_overlay); //line이 그려진 이미지 띄우기 imshow("Gray Image window", mat_image_line_image); imshow("Canny Edge Image window", mat_image_canny_edge); if(waitKey(10)>0) break; } return 0; } void sig_Handler(int sig){ printf("\n\n\n\nStop Program and Motor!\n\n\n"); motor_control_l(0); motor_control_r(0); exit(0); }모든 영역에 대한 영상처리를 수행할 필요는 없기 때문에, 위 예제에서 설정한 영역(ROI, Region of Interest)에 대하여서만 line 분석을 수행하도록 하겠습니다.

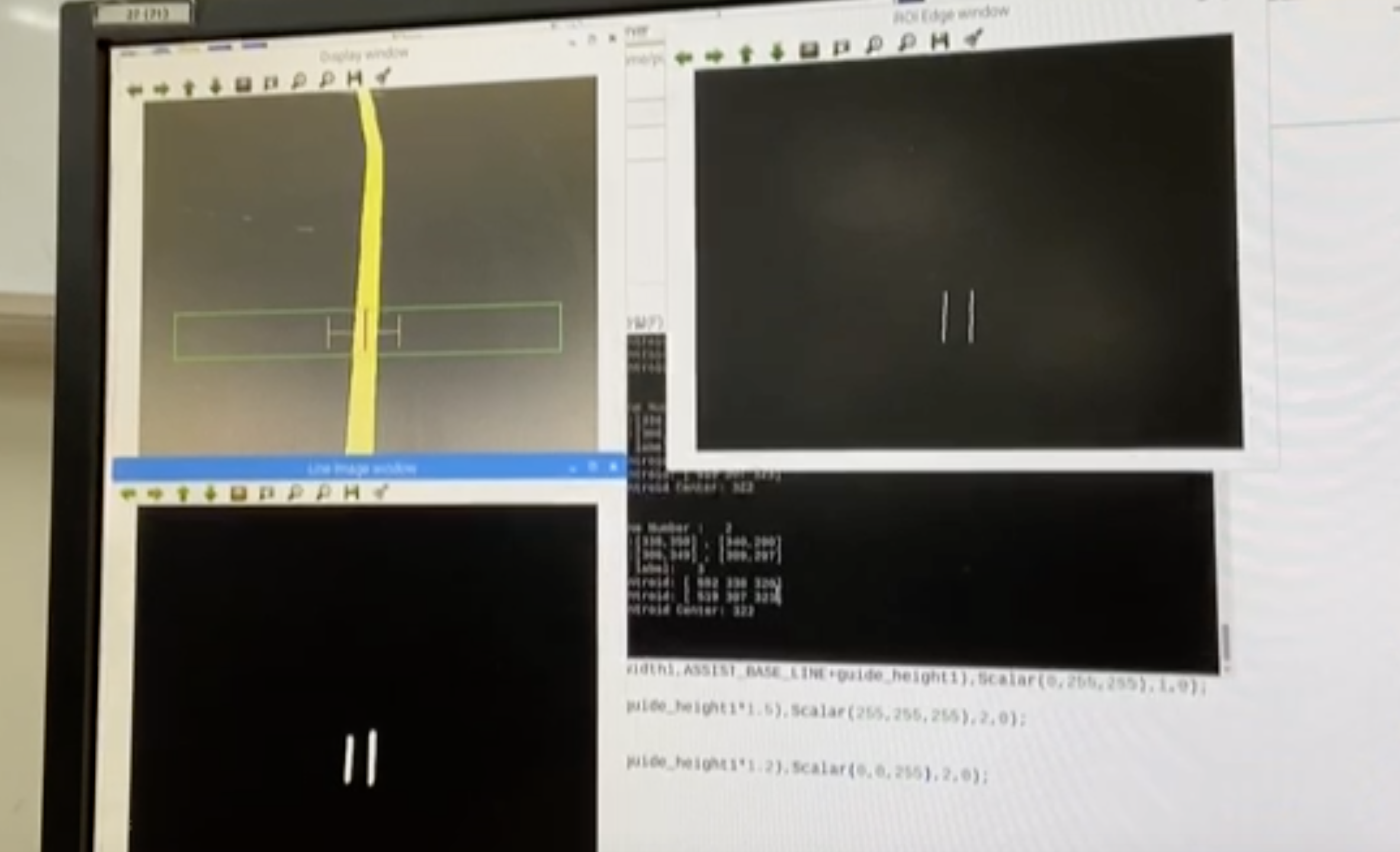

/* ... */ int line_center = -1; Mat region_of_interest(Mat img, Point *points){ Mat mask = Mat::zeros(img.rows, img.cols, CV_8UC1); Scalar mask_color = Scalar(255,255,255); const Point* pt[1] = { points }; int npt[] = { 4 }; //point들로 polygon을 생성 fillPoly(mask,pt,npt,1,Scalar(255,255,255),LINE_8); Mat masked_img; bitwise_and(img ,mask , masked_img); return masked_img; } Mat Draw_Guide_Line(Mat img){ Mat result_img; img.copyTo(result_img); rectangle(result_img,Point(50,ASSIST_BASE_LINE-ASSIST_BASE_WIDTH), Point(IMG_Width-50,ASSIST_BASE_LINE+ASSIST_BASE_WIDTH),Scalar(0,255,0),1,LINE_AA); line(result_img,Point(guide_center-guide_width1,ASSIST_BASE_LINE), Point(guide_center,ASSIST_BASE_LINE),Scalar(0,255,255),1,0); line(result_img,Point(guide_center,ASSIST_BASE_LINE), Point(guide_center+guide_width1,ASSIST_BASE_LINE),Scalar(0,255,255),1,0); line(result_img,Point(guide_center-guide_width1,ASSIST_BASE_LINE-guide_height1), Point(guide_center-guide_width1,ASSIST_BASE_LINE+guide_height1),Scalar(0,255,255),1,0); line(result_img,Point(guide_center+guide_width1,ASSIST_BASE_LINE-guide_height1), Point(guide_center+guide_width1,ASSIST_BASE_LINE+guide_height1),Scalar(0,255,255),1,0); line(result_img,Point(IMG_Width/2, ASSIST_BASE_LINE-guide_height1*1.5),Point(IMG_Width/2,ASSIST_BASE_LINE+guide_height1*1.5),Scalar(255,255,255),2,0); //draw line center line(result_img,Point(line_center, ASSIST_BASE_LINE-guide_height1*1.5),Point(line_center,ASSIST_BASE_LINE+guide_height1*1.2),Scalar(0,0,255),2,0); return result_img; } /* ... */ int main(void){ /* ... */ Mat mat_image_roi_candy_edge; Point points[4]; namedWindow("ROI Edge window", CV_WINDOW_NORMAL); resizeWindow("ROI Edge window", img_width,img_height); moveWindow("ROI Edge window", 700, 10); /* ... */ //ROI(rectangle)의 꼭짓점 points[0] = Point(0,ASSIST_BASE_LINE - ASSIST_BASE_WIDTH); points[1] = Point(0,ASSIST_BASE_LINE + ASSIST_BASE_WIDTH); points[2] = Point(IMG_Width, ASSIST_BASE_LINE + ASSIST_BASE_WIDTH); points[3] = Point(IMG_Width, ASSIST_BASE_LINE - ASSIST_BASE_WIDTH); while(1){ cv::cvtColor(mat_image_org_color, mat_image_org_gray, CV_RGB2GRAY); // color to gray conversion mat_image_canny_edge = Canny_Edge_detection(mat_image_org_gray); //canny edge image에서 ROI를 얻어냄 mat_image_roi_candy_edge = region_of_interest(mat_image_canny_edge,points); //ROI에 대한 hough line 추출 vector<Vec4i> linesP; HoughLinesP(mat_image_roi_candy_edge, linesP, 1, CV_PI/180, 40, 20, 10); printf("Line Number : %3d\n", linesP.size()); for(int i = 0; i < linesP.size(); i++){ Vec4i L = linesP[i]; line(mat_image_line_image,Point(L[0], L[1]), Point(L[2], L[3]), Scalar(255), 5, LINE_AA); printf("L :[%3d,%3d] , [%3d,%3d] \n", L[0], L[1], L[2], L[3]); } //연결되어 있는 여러 line들을 하나의 line으로 labeling Mat img_labels, stats, centroids; int no_label; int c_x,c_y; int c_x_sum=0; c_x = c_y = 0; no_label = connectedComponentsWithStats(mat_image_line_image,img_labels,stats,centroids,8,CV_32S); printf("no label: %3d\n",no_label); for(int i=1;i<no_label;i++){ int area = stats.at<int>(i,CC_STAT_AREA); //label의 면적 c_x=centroids.at<double>(i,0); c_y=centroids.at<double>(i,1); printf("Centroid: [%4d %3d %3d]\n",area,c_x,c_y); c_x_sum+=c_x; } line_center = c_x_sum/(no_label-1); printf("Centroid Center: %3d\n",line_center); printf("\n\n"); imshow("ROI Edge window", mat_image_roi_candy_edge); if(waitKey(10)>0) break; } return 0; }ROI영역 내의 line의 center를 추출해내었죠

ROI영역에서 line center 추출 이제, 주행을 제어할 준비가 다 되었습니다🤩

위의 이미지에서 추출한 line의 중점을 지속적으로 인식하여 모터를 제어하고 주행을 수행하면 되겠죠?

#define PWM_BASE 40 // PWM base value #define P_gain 0.05 // motor control offset int line_tracer_motor_control(int line_center){ int pwm_r,pwm_l; int steer_error=0; //모터를 제어하여 방향을 전환을 수행하기 위한 설정값 steer_error = line_center - IMG_Width/2; //모터 제어 pwm_r = PWM_BASE + (steer_error * P_gain); pwm_l = PWM_BASE - (steer_error * P_gain); motor_control_l(pwm_l); motor_control_r(pwm_r); printf("PWM_L: %3d | PWM_R : %3d\n",pwm_l,pwm_r); } int main(void){ int fd; int pwm_r=0; int pwm_l=0; int img_width=640; int img_height=480; int no_label; int c_x,c_y; int c_x_sum=0; c_x = c_y = 0; Mat mat_image_org_color; Mat mat_image_org_color_overlay; Mat mat_image_org_gray; Mat mat_image_gray_result; Mat mat_image_canny_edge; Mat mat_image_roi_candy_edge; Mat mat_image_line_image = Mat(IMG_Height, IMG_Width, CV_8UC1, Scalar(0)); Mat image; Mat img_labels, stats, centroids; Scalar GREEN(0,255,0); Scalar RED(0,0,255); Scalar BLUE(255,0,0); Scalar YELLOW(0,255,255); Point points[4]; VideoCapture cap(0); cap.set(CV_CAP_PROP_FRAME_WIDTH, img_width); cap.set(CV_CAP_PROP_FRAME_HEIGHT,img_height); if (!cap.isOpened()) { cerr << "에러 - 카메라를 열 수 없습니다.\n"; motor_control_l(0); motor_control_r(0); return -1; } else { cap.read(mat_image_org_color); } mat_image_org_color.copyTo(mat_image_org_color_overlay); img_width = mat_image_org_color.size().width ; img_height = mat_image_org_color.size().height; if(mat_image_org_color.empty()){ cerr << "빈 영상입니다.\n"; return -1; } namedWindow("Display window", CV_WINDOW_NORMAL); resizeWindow("Display window", img_width,img_height); moveWindow("Display window", 10, 10); namedWindow("ROI Edge window", CV_WINDOW_NORMAL); resizeWindow("ROI Edge window", img_width,img_height); moveWindow("ROI Edge window", 700, 10); namedWindow("Line Image window", CV_WINDOW_NORMAL); resizeWindow("Line Image window", img_width,img_height); moveWindow("Line Image window", 10, 500); if (GPIO_control_setup()==-1){ return -1; } //signal(SIGINT,sig_Handler); points[0] = Point(0,ASSIST_BASE_LINE - ASSIST_BASE_WIDTH); points[1] = Point(0,ASSIST_BASE_LINE + ASSIST_BASE_WIDTH); points[2] = Point(IMG_Width, ASSIST_BASE_LINE + ASSIST_BASE_WIDTH); points[3] = Point(IMG_Width, ASSIST_BASE_LINE - ASSIST_BASE_WIDTH); while(1){ mat_image_line_image = Scalar(0); cap.read(mat_image_org_color); cv::cvtColor(mat_image_org_color, mat_image_org_gray, CV_RGB2GRAY); // color to gray conversion mat_image_canny_edge = Canny_Edge_detection(mat_image_org_gray); mat_image_roi_candy_edge = region_of_interest(mat_image_canny_edge,points); vector<Vec4i> linesP; HoughLinesP(mat_image_roi_candy_edge, linesP, 1, CV_PI/180, 40, 20, 10); printf("Line Number : %3d\n", linesP.size()); for(int i = 0; i < linesP.size(); i++){ Vec4i L = linesP[i]; line(mat_image_line_image,Point(L[0], L[1]), Point(L[2], L[3]), Scalar(255), 5, LINE_AA); } if(linesP.size()!=0){ no_label = connectedComponentsWithStats(mat_image_line_image,img_labels,stats,centroids,8,CV_32S); c_x_sum=0; for(int i=1;i<no_label;i++){ int area = stats.at<int>(i,CC_STAT_AREA); c_x=centroids.at<double>(i,0); c_y=centroids.at<double>(i,1); printf("Centroid: [%4d %3d %3d]\n",area,c_x,c_y); c_x_sum+=c_x; } line_center = c_x_sum/(no_label-1); // motor control line_tracer_motor_control(line_center); } printf("\n\n"); mat_image_org_color_overlay = Draw_Guide_Line(mat_image_org_color); imshow("Display window", mat_image_org_color_overlay); imshow("ROI Edge window", mat_image_roi_candy_edge); imshow("Line Image window",mat_image_line_image); if(waitKey(10)>0) break; } return 0; }Real-Time으로 자율주행 로봇을 제어해본 시뮬레이션 영상입니다.

코드를 수정하여 동영상으로도 시뮬레이션을 수행해 볼 수도 있어요👏🏻

'프로젝트 > 자율주행 자동차 제작 프로젝트' 카테고리의 다른 글

라즈베리파이에서 Thread 구현하기 (0) 2020.09.02 OpenCV를 활용하여 Line과 Lane 추출하기 (0) 2020.09.02 서강대학교-현대모비스 : SW인재양성 프로젝트 (0) 2020.09.02